Indian Express: 3 staff memos flagged ‘polarising’ content, hate speech in India but Facebook said not a problem

A spokesperson for Meta Platforms Inc said: “We had hate speech classifiers in Hindi and Bengali from 2018. Classifiers for violence and incitement in Hindi and Bengali first came online in early 2021”.

FROM a “constant barrage of polarising nationalistic content”, to “fake or inauthentic” messaging, from “misinformation” to content “denigrating” minority communities, several red flags concerning its operations in India were raised internally in Facebook between 2018 and 2020.

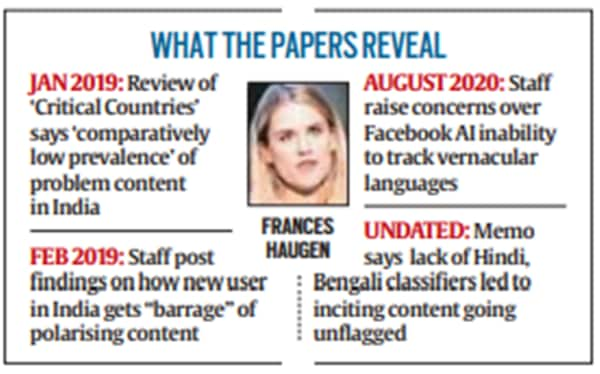

However, despite these explicit alerts by staff mandated to undertake oversight functions, an internal review meeting in 2019 with Chris Cox, then Vice President, Facebook, found “comparatively low prevalence of problem content (hate speech, etc)” on the platform.

Two reports flagging hate speech and “problem content” were presented in January-February 2019, months before the Lok Sabha elections.

A third report, as late as August 2020, admitted that the platform’s AI (artificial intelligence) tools were unable to “identify vernacular languages” and had, therefore, failed to identify hate speech or problematic content.

Yet, minutes of the meeting with Cox concluded: “Survey tells us that people generally feel safe. Experts tell us that the country is relatively stable.”

These glaring gaps in response are revealed in documents which are part of the disclosures made to the United States Securities and Exchange Commission (SEC) and provided to the US Congress in redacted form by the legal counsel of former Facebook employee and whistleblower Frances Haugen.

Frances Haugen, a former Facebook data scientist-turned-whistleblower, has released a series of documents that revealed that the products of the social network giant harmed the mental health of teenage girls. (AP)

The redacted versions received by the US Congress have been reviewed by a consortium of global news organisations including The Indian Express.

Facebook did not respond to queries from The Indian Express on Cox’s meeting and these internal memos.

The review meetings with Cox took place a month before the Election Commission of India announced the seven-phase schedule for Lok Sabha elections on April 11, 2019.

The meetings with Cox, who quit the company in March that year only to return in June 2020 as the Chief Product Officer, did, however, point out that the “big problems in sub-regions may be lost at the country level”.

The first report, “Adversarial Harmful Networks: India Case Study”, noted that as much as 40 per cent of sampled top VPV (viewport views) postings in West Bengal were either fake or inauthentic.

VPV or viewport views is a Facebook metric to measure how often the content is actually viewed by users.

The second, an internal report authored by an employee in February 2019, is based on the findings of a test account. A test account is a dummy user with no friends, created by a Facebook employee to better understand the impact of various features of the platform.

This report notes that in just three weeks, the test user’s news feed had “become a near constant barrage of polarizing nationalistic content, misinformation, and violence and gore”.

The test user followed only the content recommended by the platform’s algorithm. The account was created on February 4; it did not ‘add’ any friends, and its news feed was “pretty empty”.

According to the report, the ‘Watch’ and ‘Live’ tabs are pretty much the only surfaces that have content when a user isn’t connected with friends.

“The quality of this content is… not ideal,” the report by the employee said, adding that the algorithm often suggested “a bunch of softcore porn” to the user.

Over the next two weeks, and especially following the February 14 Pulwama terror attack, the algorithm started suggesting groups and pages which centred mostly around politics and military content. The test user said he/she had “seen more images of dead people in the past 3 weeks than I have seen in my entire life total”.

Responding to a specific query on the basis of conclusions presented in the review meeting with Cox, a spokesperson for Meta Platforms Inc said: “Our teams have developed an industry-leading process of reviewing and prioritizing which countries have the highest risk of offline harm and violence every six months. We make these determinations in line with the UN Guiding Principles on Business and Human Rights and following a review of societal harms, how much Facebook’s products impact these harms and critical events on the ground.”

The Facebook group was rebranded on October 28 as Meta Platforms Inc, bringing together various apps and technologies under a new company brand.

On whether the findings of the study, which pointed out that the lack of Hindi and Bengali classifiers was leading to violence and inciting content, were taken into account before the conclusions presented in the review with Mr Cox were made, the spokesperson said: “We invest in internal research to proactively identify where we can improve – which becomes an important input for defining new product features and our policies in the long-term. We had hate speech classifiers in Hindi and Bengali from 2018. Classifiers for violence and incitement in Hindi and Bengali first came online in early 2021”.

Specifically on the test user analysis, the company’s spokesperson reiterated its earlier statement and said that the “exploratory effort of one hypothetical test account inspired deeper, more rigorous analysis of our recommendation systems, and contributed to product changes to improve them”. “Product changes from subsequent, more rigorous research included things like the removal of borderline content and civic and political Groups from our recommendation systems. Separately, our work on curbing hate speech continues and we have further strengthened our hate classifiers, to include 4 Indian languages,” they added.

Facebook had in October told The Indian Express it had invested significantly in technology to find hate speech in various languages, including Hindi and Bengali.

“As a result, we’ve reduced the amount of hate speech that people see by half this year. Today, it’s down to 0.05 percent. Hate speech against marginalized groups, including Muslims, is on the rise globally. So we are improving enforcement and are committed to updating our policies as hate speech evolves online,” a Facebook spokesperson had said.

However, the issue of Facebook’s algorithm and proprietary AI tools being unable to flag hate speech and problematic content surfaced in August 2020, when employees questioned the company’s “investment and plans for India” to prevent hate speech content.

“From the call earlier today, it seems AI (artificial intelligence) is not able to identify vernacular languages and hence I wonder how and when we plan to tackle the same in our country? It is amply clear what we have at present, is not enough,” another internal memo said.

The memos are a part of a discussion between Facebook employees and senior executives. The employees questioned how Facebook did not have “even basic key work detection set up to catch” potential hate speech.

“I find it inconceivable that we don’t have even basic key work detection set up to catch this sort of thing. After all cannot be proud as a company if we continue to let such barbarism flourish on our network,” an employee said in the discussion.

The memos reveal that employees also asked how the platform planned to “earn back” the trust of colleagues from minority communities, especially after a senior Indian Facebook executive had shared a post on her personal Facebook profile which many felt “denigrated” Muslims.

The Meta spokesperson, however, said that the assertion that Facebook’s AI was not able to identify vernacular languages was incorrect. “We added hate speech classifiers in Hindi and Bengali starting in 2018. In addition, we have hate speech classifiers in Tamil and Urdu,” they said in response to a query.

This article first appeared in Indian Express